By: Maida Salkanović

Beneath the dark-framed glasses of Daniel Smith, a supposed British expert on Western Balkans affairs, lies the gaze of a man burdened with the complexities of Southeast Europe (SEE). Known for his firm stance on Kosovo’s independence and for advocating the expulsion of the Russian ambassador to Bosnia-Herzegovina, Smith was sought out for his insights by major media outlets across SEE. With his commentary on Russian influence and his political analysis appearing in several reputable publications, Smith became a familiar figure in debates on Balkan geopolitics. Yet, in a startling revelation that strikes at the heart of journalistic integrity, it has emerged that Daniel Smith, the esteemed authority on Balkan affairs, never existed.

In early 2023, the Serbian newspaper Danas published an interview with a figure introduced as a “British international affairs and security expert,” who spoke about Russian influence in Serbia and the wider Western Balkans. Smith made a compelling case for Serbian President Aleksandar Vučić to recognize Kosovo’s independence, framing it as a reality already in effect.

Before this feature, Smith had already given two interviews to Al Jazeera Balkans and had been quoted in Albanian media. These appearances painted him as an authority on the region, which convinced Danas of his legitimacy. In every one of these interactions, Smith was represented by the same single photograph.

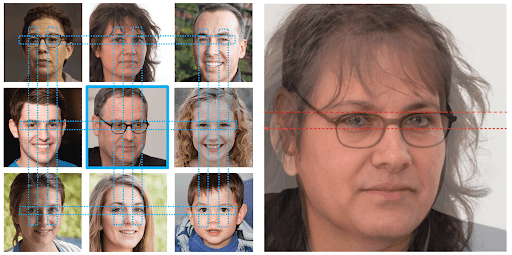

After the Danas article was published, readers raised doubts about Smith’s authenticity, and his Twitter profile, which had been the primary channel for contacting him, was deleted. In the wake of the controversy, the Novi Sad-based Serbian fact-checking platform Fake News Tragač (FNT) examined Smith’s photograph. Its analysis suggested that the image had been generated by artificial intelligence (AI), pointing specifically to the website “This Person Does Not Exist.”

FNT’s report described distinctive characteristics of AI-generated images, noting the uniform square format and the fixed alignment of the eyes, traits shared by other fabricated faces generated on the same website.

FNT further explained that such photos often have abstract, blurred backgrounds and show errors in how accessories such as glasses, jewelry, and scarves are rendered. The photograph of Smith, for instance, showed precisely centered eyes, glasses with a malformed frame, and an odd blur beneath the right lens. An unusual bulge on his neck raised further suspicion about the image’s authenticity.

The revelation has highlighted the urgent need for vigilance among journalists and fact-checkers across Southeast Europe, showing that AI can be used to manufacture not only compelling narratives but entirely fabricated identities. The Daniel Smith debacle has brought the demand for more advanced verification methods to the forefront.

The incident also illustrates the growing challenge of AI-generated disinformation in Southeast Europe and raises a crucial question: how prepared are media professionals and the general public to identify and counter such deceptions? Journalists and fact-checkers will need to adapt to this growing threat to safeguard the integrity of information in an increasingly complex digital landscape.

The Evolution of AI

AI is a broad field of computer science that enables machines to perform tasks typically associated with human intelligence, such as reading, writing, talking, creating, playing, analyzing, and making recommendations. The recent surge in generative AI tools such as ChatGPT and Bard, which create content on demand from user prompts, has propelled AI into mainstream discussion, with these tools amassing over 100 million users in 2023 alone. While the film industry has long fueled AI-related fears with movies such as “Terminator” and, more recently, “M3gan,” the rise of AI also worries fact-checkers because of its role in generating disinformation, a challenge increasingly encountered by the SEE Check Network’s fact-checking newsrooms.

Fact-checking platforms in SEE have noticed a significant evolution in AI-generated disinformation, which is becoming increasingly difficult to distinguish from real content. Stefan Kosanović from Belgrade-based Raskrikavanje.rs pointed to the rapid advances in AI technology, noting the huge difference between AI-generated videos from just a year ago and those produced today. Kosanović also mentioned new tools such as OpenAI’s Sora, emphasizing its potential for creating hyper-realistic videos.

“It’s incredible how realistically it portrays animals, nature, and even parades packed with people. I think there are endless ways this program can be used, and one of them is certainly disinformation,” Kosanović told SEE Check.

Until now, fact-checkers in SEE have primarily focused on debunking AI-created photos and deepfake videos, a task that was relatively straightforward due to the primitive nature of the tools employed for creating such content.

“Until now, what we encountered was so obvious and gained so little traction that we decided not to fact-check it,” said Žana Erznožnik, an editor at the Slovenian Razkrinkavanje. Although the current volume and complexity of AI-generated disinformation are not a major concern, fact-checkers remain vigilant and expect both the quantity and the sophistication of AI-created content to rise. “The volume of such disinformation is still minimal compared to traditional forms, but the trend indicates that this is likely to change,” observed Rašid Krupalija, an editor at Sarajevo-based Raskrinkavanje.ba.

However, it remains unclear how much of the textual disinformation in circulation is AI-generated.

“Differentiating between AI-generated content and genuine content is becoming nearly impossible,” stated Feđa Kulenović, an information scientist from Sarajevo.

“In some instances, particularly with audio and video, there may be indicators of AI involvement, especially if the prompts are poorly constructed. Nevertheless, the rapid advancement of this technology poses a significant threat. Perhaps our lack of awareness acts as a protective veil, since not knowing the full extent of such information makes us less apprehensive,” Kulenović explained.

Partying with the Pope

As the Daniel Smith case shows, AI can be used to manufacture credibility. A popular use of deepfake videos in the region has been to promote health supplements through identity theft. Scammers follow a simple yet effective scheme: pick a well-regarded or popular figure and produce counterfeit videos that show that person endorsing the scammers’ products.

This manipulation has targeted a wide range of public figures, including doctors, politicians, folk singers, and TV presenters, exploiting their credibility to market products of dubious quality. In 2024, scams that misused the likeness of Croatian President Zoran Milanović for identity theft were also reported. Beyond commercial fraud, AI can be used to undermine authority and tarnish the reputations of individuals, organizations, or ideologies.

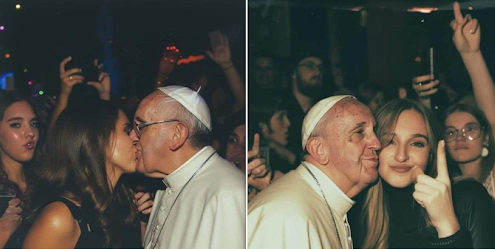

“Those most often targeted by the creators and disseminators of such deceptive content are political leaders, global officials, and public figures, who are linked to disinformation about their arrests, certain misdemeanors, or inappropriate and deviant behavior. This can be illustrated with photos and deepfake videos related to U.S. Presidents Joe Biden, Donald Trump, and Barack Obama, Ukrainian President Volodymyr Zelensky, or Pope Francis,” Jelena Jovanović, executive editor at Podgorica-based Raskrinkavanje, told SEE Check.

In one striking instance, activist Greta Thunberg is falsely depicted advocating for “vegan bombs” in armed conflicts. Similarly, another fabrication shows Pope Francis partying and kissing a woman.

These fabrications might be dismissed as mere jokes if audiences were better at recognizing fake imagery. Yet even initially humorous attempts have been taken at face value. One example is a series of AI-generated videos purporting to show that billionaire Elon Musk hails from Republika Srpska in Bosnia-Herzegovina, professes his love for Serbia, and has swapped his Tesla for a Golf II, a notably old but popular car in the region. Although many social media users got the joke, some missed the satire, sparking nationalistic debates about Musk’s supposed preference for Serbia. Other fabrications, however, are glaringly obvious.

“We’ve abandoned certain cases because of their sheer absurdity: for instance, claims that the Nazis built the Egyptian pyramids are beyond belief. And there are most likely cases we have overlooked, which have managed to deceive us as well,” admits Marija Zemunović, a journalist at Fake News Tragač.

Jelena Jovanović emphasizes the pivotal role of media literacy in combating AI-generated disinformation.

“Since early 2023, we’ve been diligently informing citizens about various instances of disinformation to heighten their awareness and bolster media literacy. Our aim is to equip individuals with the skills to identify such deceitful content before it can have adverse effects, thus actively curtailing the spread of AI-generated disinformation,” she explains.

Navigating the Digital Age

In the rapidly evolving digital landscape, the proliferation of AI-generated disinformation poses a significant challenge to our ability to tell truth from fabrication. It reflects a broader struggle to adapt to a world where disinformation spreads with alarming speed and is ever easier to create.

Journalists, who are on the front lines of information dissemination, have keenly adopted the tools and methods of the digital era, perhaps a bit too eagerly. The “Daniel Smith” case is a telling example: his interviews were conducted exclusively in writing. When a journalist proposed a follow-up video interview amid rising suspicions about his authenticity, Smith, along with his Twitter presence, vanished into thin air. In the analog days, journalists routinely spoke with their interviewees by phone or in person, which allowed them to pick up on nuances such as accent and language proficiency. One Twitter user noticed from Smith’s writing that he was not a native English speaker, a clue that might have been more apparent in a spoken exchange.

Evidently, the digital era brings new challenges that journalists are only beginning to navigate. After discovering that Daniel Smith was a fabrication, Danas admitted in its correction that it had skipped thorough verification, swayed by Al Jazeera’s earlier interviews with Smith, which seemed to lend him credibility.

“We apologize to our readers and the public for misleading them, but we’ve learned the lesson that journalism in the internet age is quite challenging and that we must be much more cautious and verify even what seems to be authentic. Based on our own experience, we will, in collaboration with investigative and fact-checking platforms, pay more attention to the education of journalists and the audience to avoid such situations in the future,” Danas acknowledged.

As AI technologies continue to advance, there is a pressing need for fact-checking organizations to adapt and innovate. This might involve developing new verification tools specifically designed to detect AI-generated content or enhancing the digital literacy of both the public and professionals involved in content creation and verification. The evolution of AI presents a challenge that goes beyond the technical; it calls for a comprehensive approach that encompasses ethical considerations, regulatory frameworks, and public awareness to ensure that the digital information ecosystem remains trustworthy and transparent.

The Promise of AI in Journalism and Fact-Checking

As the digital landscape evolves, AI emerges not only as a challenge but also as a significant potential asset for journalists and fact-checkers. News organizations worldwide are increasingly integrating AI into various facets of news production, spanning from generating topic ideas and translating content to editing and, in some instances, crafting articles. The utilization of AI for content creation, however, remains a contentious topic, with numerous outlets viewing it as a considerable risk to their reputation.

Viola Keta from Faktoje noted: “Sometimes we use ChatGPT, but we are very cautious, knowing that fact-checking is a human job and responsibility. However, we think that staying up to date on every AI tool that improves our everyday work would be helpful.”

In the UK, Full Fact, a renowned fact-checking organization, has pioneered the development of an AI system designed to streamline the fact-checking process. The tool helps Full Fact and its partners automate the search for claims, monitor media outlets and social platforms, and set up alerts for claims that have already been verified.

In SEE, fact-checking organizations are gradually exploring the capabilities of AI, though their engagement with the technology’s full potential remains nascent. Organizations such as Faktograf in Croatia and Raskrikavanje in Serbia have experimented with AI, particularly for photo generation, but have applied the technology only to a limited extent.

“We don’t use any AI tool daily; we’ve experimented a bit with photo generators, as they are an interesting solution for illustrating texts. We also tried one for geolocation – you insert a photo, and AI tells you where you are – it wasn’t very successful. Certainly, an improved version of such a tool would be very helpful to us, as well as one that could potentially extract additional metadata from multimedia content,” said Stefan Kosanović from Raskrikavanje.

Jovanović from the Montenegrin Raskrinkavanje highlighted the team’s use of specific AI applications, such as “AI or Not” for identifying AI-generated images and “Deepware” for detecting deepfakes, and stressed the importance of cross-checking results with multiple verification tools. This caution stems from an awareness of AI’s potential to craft convincing deceptions.

Marija Zemunović from FNT underscored this sentiment: “Given our experience analyzing AI-created content, we’re aware of its potential for deceit, prompting us to approach new tools with a high degree of caution.”

In general, fact-checkers in SEE are open to integrating AI tools into their work, albeit with some caveats. Žana Erznožnik from Razkrinkavanje said her team would consider using AI for fact-checking, provided that data security and ethical guidelines are respected.

As AI-driven disinformation grows more sophisticated, so does the demand for equally advanced fact-checking tools. For now, fact-checkers rely mainly on traditional methods, triangulating data and approaching each case individually, supplemented by a few tools designed specifically for AI content. Still, this approach has its limitations.

“If we cannot find the first post of an AI-generated photo or video using standard search tools, it is almost impossible to confirm that something is indeed AI-generated content,” said Ivica Kristović from the Zagreb-based Faktograf.

Rašid Krupalija from the Bosnian Raskrinkavanje agreed: “Currently, we lack tools specifically designed to debunk AI-generated disinformation like deepfake videos, which makes verifying such content more complex. As AI tools advance, the complexity of verifying disinformation created with their help will increase, necessitating tools made specifically for checking such content,” he told SEE Check. Acquiring such tools, however, is not without obstacles.

“We intended to use AI tools for the photos that illustrate our texts and analyses, but so far, we haven’t been able to do so. Some require payment, and others aren’t very helpful. Where AI could definitely contribute is in speeding up reverse image and video searches. The existing tools at our disposal could be much more advanced, and given that AI offers a plethora of possibilities, we likely could find a better digital solution that would make performing daily tasks simpler and more efficient,” said Jelena Jovanović from Montenegrin Raskrinkavanje.

This scenario reveals a broader issue: the disparity between more and less economically developed regions in accessing and utilizing AI tools. The digital divide underscores the unequal opportunities for leveraging AI, which could impact the global fight against disinformation.

In Southeast Europe, as elsewhere, the arrival of AI in fact-checking signals a new era of challenges and opportunities. As AI technologies become more sophisticated and harder to detect, fact-checkers must remain vigilant. The case of “Daniel Smith” serves as a cautionary tale, a reminder of how convincing AI-generated disinformation has become and of the need for fact-checkers to keep adapting in their critical role of safeguarding truth in the digital age.