The original article (in Croatian) was published on 06/11/2025; Author: Anja Vladisavljević

Not all news, photos or videos you see online are what they seem to be. Learn to catch AI “red-handed”

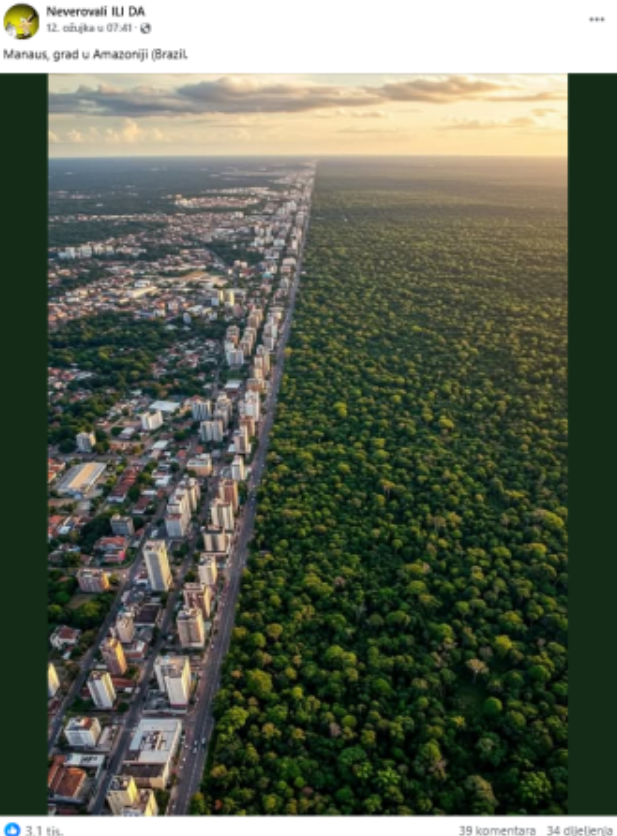

Scrolling through social media, you come across a photo that takes your breath away. You stop for a moment, your eyes lighting up at the perfect scene: densely packed concrete buildings, roofs, asphalt and steel on one side, and on the other, the lush, dense and wild Amazon rainforest. The boundary between these two worlds, human civilization and nature, is strikingly sharp, which is precisely what makes the photo so impressive.

You think to yourself: “My friends have to see this!” Without a second thought, you click “share”. The photo, supposedly taken over the Brazilian city of Manaus, has drawn plenty of praise and hundreds of likes, with users describing it as an impressive example of the “symbiosis of nature and the city”.

While parts of Manaus do lie along the edge of the Amazon rainforest, the image shared online does not depict a real scene. It was generated with artificial intelligence, as we reported in March this year.

This is just one of many cases of AI technology being used to generate visual (and other) content that looks increasingly realistic. The motivations for creating such disinformation vary, and some, like the example above, are not malicious, at least at first glance (1, 2, 3).

Some people create such content simply for fun or out of curiosity, testing the new technology’s “creativity”. Others use it for inspiration, for example to present idealized versions of landscapes, cities, buildings, people or animals. However, some of this content is driven by far more serious and malicious intentions, especially in online scams (1, 2, 3), (information) wars (1, 2) and political campaigns that aim to manipulate public opinion and discredit opponents (1, 2).

That is why it is important to know how to recognize AI-generated content. This article presents some useful tools and instructions on how to protect yourself from these growing forms of digital manipulation.

AI recognition tools

Alongside the development of tools for creating synthetic and digitally manipulated content, tools for detecting it are being developed as well. However, as some experts have pointed out, this is a never-ending race: as AI detection technology improves, AI-generated content becomes more realistic.

Several tools are popular among journalists, especially those who work on exposing false and inauthentic content (for example, Hive Moderation, Deepware Scanner or Winston AI). Once you upload the content you want to check, these tools express, as a percentage, the likelihood that it was created by a human rather than a machine.

Shirin Anlen and Raquel Vázquez Llorente, researchers at WITNESS, an organization that works on AI transparency (1, 2), compared the reliability of various deepfake detection tools popular among journalists. Their list included Optic, Hive Moderation, V7, InVID and Deepware Scanner. They concluded that these tools can serve as an “excellent starting point” for a more comprehensive verification process, but that they should not be used on their own, because their results can be difficult to interpret.

This is because most AI detection tools give either a confidence interval or a probabilistic determination (e.g. 85% human), while others give only a binary “yes/no” result. “It can be challenging to interpret these results without knowing more about the detection model, such as what it was trained to detect, the dataset used for training, and when it was last updated,” the authors write.

When a journalist uploads a suspicious-looking image to an AI detection tool and is told that the photo is “70 percent human” and “30 percent artificial”, this says very little about which elements of the image have been digitally altered, and even less about its overall authenticity. Labelling an image as even partially “artificial” implies (potentially incorrectly) that it has been significantly altered from its original state, yet it tells you nothing about how the nature, meaning or implications of the image might have changed.

For example, a tool may conclude that a photo was digitally processed or created with the help of a machine simply because you uploaded a “bad file”: a cropped, blurred or compressed photo with no metadata.

This is why it is a good idea to combine multiple methods when verifying content suspected of being AI-generated. Faktograf journalists also use AI detection tools, but always back them up with additional checks.

For example, if the person shown in a deepfake video is a celebrity, we contact that person to confirm whether the content is authentic (1, 2). We also check geolocation, search for the source of the original photo or video, ask experts for their opinion, and look for logical inconsistencies in the content.

AI features you can identify by yourself

As Faktograf has previously written, to create a convincing synthetic copy of a person’s voice or appearance, AI tools need material to learn from. In other words, AI tools first have to be “fed” existing authentic recordings of the person whose identity will be used to spread disinformation and commit fraud, which is why scammers rely on easily accessible recordings (e.g. on YouTube and similar services).

An article in The Guardian explaining how to identify deepfakes and AI-generated content notes that videos, especially those featuring people, are harder to fake than photos or audio. Because video combines image, movement and sound, these elements often end up mismatched.

When analyzing deepfake videos of people, focus on facial expressions, blinking and the edges of the head (which may be jagged or pixelated). Pay special attention to lip movements, since current AI video tools usually manipulate them to make the person appear to say sentences they never said in real life.

AI-generated human voices often have a softer tone, and the speech sounds less conversational than the way we normally talk. You also won’t hear much emotion, many pauses, or filler words.

AI-generated photos of people also contain a number of inconsistencies. The most noticeable ones involve faces, limbs and fingers: AI often generates extra fingers, severed limbs and unnatural postures. Smiles can look unnatural, with blurred or oddly shaped teeth. The backgrounds of such “photos” often contain distorted objects and unnaturally positioned shadows.

When it comes to content without people, such as images of buildings or landscapes like the example above, there are a few things to consider. Artificial intelligence often generates images with dramatic lighting, strong colors and exaggerated effects. We saw this with the wildfires that struck the Los Angeles area at the beginning of the year.

In many of the videos and photos allegedly showing the LA area, the movement of the fire looked unrealistic, and the shadows and clouds of smoke were too uniform in shape and direction. These turned out to have been generated with digital tools (1, 2).

Social media policies

In response to growing concerns about disinformation, deepfakes and lack of transparency, social networks have begun to regulate the use of artificial intelligence. The rules vary from platform to platform, that is, depending on the company behind it.

Meta, the company that owns Facebook, Instagram and Threads, requires an AI label on content that contains “photorealistic video or realistic sound that has been digitally created, modified, or altered, including through artificial intelligence.” Images created with Meta’s own AI tools are labelled “Imagined with AI”, and visible markers, invisible watermarks and metadata are embedded in these images to indicate their origin.

Meta also plans to label images generated by other companies’ AI tools (OpenAI, Google, Adobe) by detecting industry-shared signals embedded in the content.

TikTok requires content creators to label AI-generated content. If a creator does not do so, TikTok can automatically apply an “AI-generated” label to content it identifies as fully generated or significantly edited by AI.

YouTube also instructs users to disclose if their content includes realistic AI-generated or synthetically altered footage. This is especially important when it comes to content related to sensitive topics such as health, news, elections or finance.

Some users of these platforms create content exclusively with AI and state this openly in their profile descriptions. However, because content is easily downloaded or screenshotted and moved from platform to platform (often without the author’s knowledge), people who copy or steal it sometimes deliberately share it without disclosing that it is not authentic. Faktograf encounters such cases very often.

Last year, a description and image of a bird called the “Madagascar egg-bearing thrush” appeared on social networks. Supposedly, it is the only bird that does not lay its eggs in a nest. The post claimed that “at the end of pregnancy, the female lays two, and in rare cases three, almost perfectly round eggs in a special skin bag”.

The photo shared with this claim was created using artificial intelligence (AI) tools. It was published on March 26, 2024 on the Instagram profile “dallvador” (1, 2), whose description reads: “Exploring the intersection of art and artificial intelligence. All images exclusively generated by DALL·E from OpenAI”. The caption of the image tells the story of an imaginary bird, TitanNut, which “nature has gifted with a unique talent: attracting attention”. It supposedly did so with its “tiny legs and disproportionately large attributes”.

The cute, unusual bird quickly flew across the far reaches of the internet, cut off from its creator’s original intention.

So even when you just want to play with AI tools, have a bit of fun or impress your followers, keep in mind that AI-generated content can easily be passed off as real. At that point, it becomes a powerful tool for deception and manipulation.

So, pause for a moment and think before you click “share”.